With today’s fast and widely available internet, there is rarely much reason to download an entire website for offline use. But if you need a copy of a site as a backup, or you are traveling somewhere remote, these tools will let you download an entire website for offline reading.


Here’s a quick list of some of the best website-downloading programs to get you started. HTTrack is the best of them and has been a favorite of many users for years.

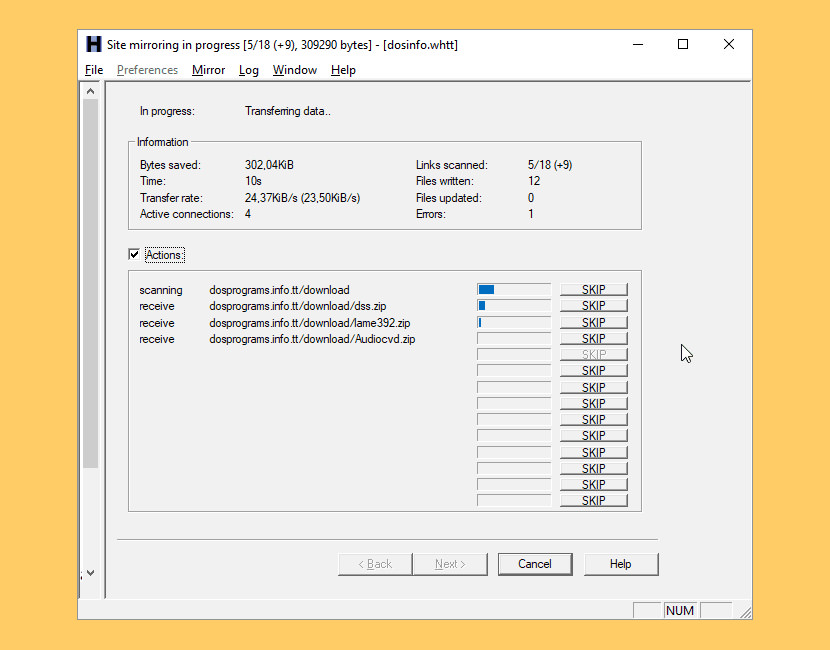

1. HTTrack

HTTrack is a free (GPL, libre/free software) and easy-to-use offline browser utility. It allows you to download a World Wide Web site from the Internet to a local directory, recursively building all directories and getting HTML, images, and other files from the server to your computer. HTTrack preserves the original site’s relative link structure.

Simply open a page of the “mirrored” website in your browser, and you can browse the site from link to link, as if you were viewing it online. HTTrack can also update an existing mirrored site, and resume interrupted downloads. HTTrack is fully configurable, and has an integrated help system.
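As a rough illustration of what keeping a site’s relative link structure involves, the sketch below maps each URL to a file path under a local mirror directory and computes the relative link between two mirrored files. It is not HTTrack’s actual algorithm: the function names `local_path_for` and `relative_link`, the `mirror` directory name, and the convention of storing directory URLs as `index.html` are all assumptions made for this example, and query strings are ignored for brevity.

```python
import posixpath
from urllib.parse import urlsplit


def local_path_for(url: str, mirror_root: str = "mirror") -> str:
    """Map a URL to a path under mirror_root that keeps the site's layout."""
    parts = urlsplit(url)
    path = parts.path or "/"
    # Assumption: directory-style URLs are stored as index.html on disk.
    if path.endswith("/"):
        path += "index.html"
    return posixpath.join(mirror_root, parts.netloc, path.lstrip("/"))


def relative_link(from_url: str, to_url: str) -> str:
    """Return the link to write into from_url's local copy to reach to_url's."""
    start_dir = posixpath.dirname(local_path_for(from_url))
    return posixpath.relpath(local_path_for(to_url), start=start_dir)


if __name__ == "__main__":
    print(local_path_for("https://example.com/docs/"))
    print(relative_link("https://example.com/docs/",
                        "https://example.com/img/logo.png"))
```

Because every link is rewritten relative to the file that contains it, the mirrored folder can be moved or opened from any location without breaking navigation.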

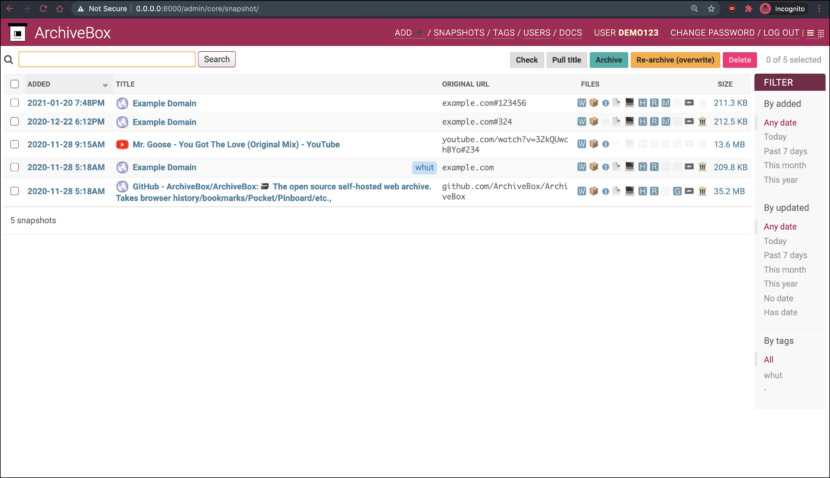

2. ArchiveBox

ArchiveBox is a powerful, self-hosted internet archiving solution to collect, save, and view sites you want to preserve offline. You can set it up as a command-line tool, web app, and desktop app (alpha), on Linux, macOS, and Windows. You can feed it URLs one at a time, or schedule regular imports from browser bookmarks or history, feeds like RSS, bookmark services like Pocket/Pinboard, and more. See input formats for a full list.

It saves snapshots of the URLs you feed it in several formats: HTML, PDF, PNG screenshots, WARC, and more out-of-the-box, with a wide variety of content extracted and preserved automatically (article text, audio/video, git repos, etc.). See output formats for a full list. The goal is to sleep soundly knowing the part of the internet you care about will be automatically preserved in durable, easily accessible formats for decades after it goes down.

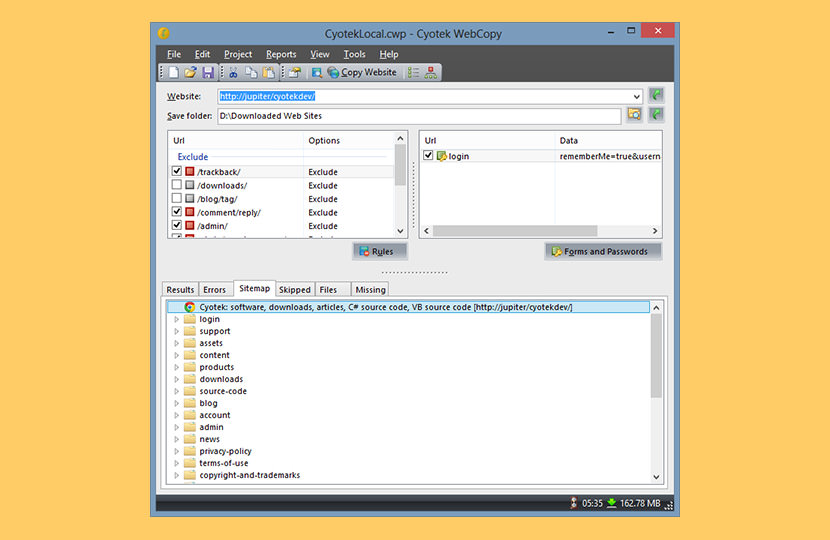

3. Cyotek WebCopy

Cyotek WebCopy is a free tool for copying full or partial websites locally onto your hard disk for offline viewing. WebCopy will scan the specified website and download its content onto your hard disk. Links to resources such as style sheets, images, and other pages in the website will automatically be remapped to match the local path. Using its extensive configuration, you can define which parts of a website will be copied and how.

WebCopy will examine the HTML mark-up of a website and attempt to discover all linked resources such as other pages, images, videos, file downloads – anything and everything. It will download all of these resources, and continue to search for more. In this manner, WebCopy can “crawl” an entire website and download everything it sees in an effort to create a reasonable facsimile of the source website.
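The crawl loop described above can be sketched in a few lines. This is a simplified illustration, not WebCopy’s implementation: `LinkCollector`, `crawl`, the `max_depth` limit, and the injected `fetch` callable are hypothetical names chosen for the example. The fetcher is passed in so the logic can be tried against an in-memory site; a real tool would fetch over HTTP and handle binary resources as bytes rather than text.

```python
from collections import deque
from html.parser import HTMLParser
from typing import Callable, Dict, List, Optional
from urllib.parse import urldefrag, urljoin, urlsplit


class LinkCollector(HTMLParser):
    """Collect the values of href and src attributes from any tag."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[str] = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name in ("href", "src") and value:
                self.links.append(value)


def crawl(start_url: str,
          fetch: Callable[[str], Optional[str]],
          max_depth: int = 2) -> Dict[str, str]:
    """Breadth-first crawl of one host; fetch(url) returns text or None."""
    host = urlsplit(start_url).netloc
    pages: Dict[str, str] = {}
    seen = {start_url}
    queue = deque([(start_url, 0)])
    while queue:
        url, depth = queue.popleft()
        body = fetch(url)
        if body is None:          # missing or failed resource
            continue
        pages[url] = body
        if depth >= max_depth:    # keep the resource, but do not follow links
            continue
        collector = LinkCollector()
        collector.feed(body)
        for link in collector.links:
            absolute, _fragment = urldefrag(urljoin(url, link))
            # Stay on the starting host and visit each URL only once.
            if urlsplit(absolute).netloc == host and absolute not in seen:
                seen.add(absolute)
                queue.append((absolute, depth + 1))
    return pages


if __name__ == "__main__":
    # Hypothetical in-memory site standing in for real HTTP responses.
    site = {
        "https://example.com/": '<a href="/about">About</a>',
        "https://example.com/about": '<a href="/#top">Home</a>',
    }
    print(sorted(crawl("https://example.com/", site.get)))
```

The `seen` set is what keeps the crawl from looping forever on sites whose pages link back to each other.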

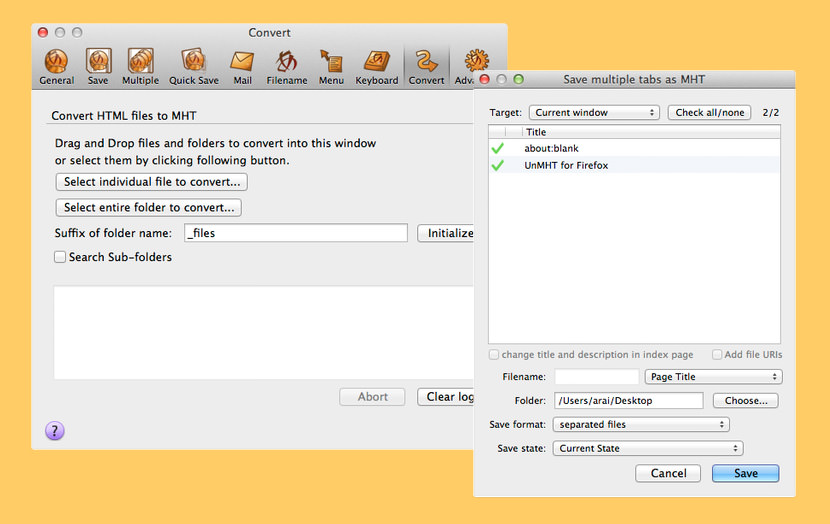

4. UnMHT

UnMHT allows you to view MHT (MHTML) web archive format files, and save complete web pages, including text and graphics, into a single MHT file in Firefox/SeaMonkey. MHT (MHTML, RFC 2557) is a web page archive format that stores HTML, images, and CSS in a single file.

- Save a web page as an MHT file.

- Insert the URL of the web page and the date you saved it into the saved MHT file.

- Save multiple tabs as MHT files at once.

- Save multiple tabs into a single MHT file.

- Save a web page with a single click into a prespecified directory with the Quick Save feature.

- Convert an HTML file, and the directory containing the files it uses, into an MHT file.

- View MHT files saved by UnMHT, IE, PowerPoint, etc.
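To give a feel for the format itself, here is a minimal sketch that packs a page and its resources into one MHTML-style file using Python’s standard `email` package. It assumes the usual layout of a `multipart/related` message whose parts carry `Content-Location` headers; the function name `build_mht` and the choice to record the save time in the `Date` header are assumptions for this example, not a description of how UnMHT writes its files.

```python
from email import encoders, message_from_bytes
from email.mime.base import MIMEBase
from email.mime.multipart import MIMEMultipart
from email.mime.text import MIMEText
from email.utils import formatdate
from typing import Mapping, Tuple


def build_mht(page_url: str,
              html: str,
              resources: Mapping[str, Tuple[str, bytes]]) -> bytes:
    """Pack a page and its resources into one multipart/related archive.

    resources maps each resource URL to (mime_type, raw_bytes).
    """
    archive = MIMEMultipart("related", type="text/html")
    archive["Date"] = formatdate(localtime=True)  # assumption: save time

    # The first part is the page itself, tagged with its original URL.
    root = MIMEText(html, "html", "utf-8")
    root["Content-Location"] = page_url
    archive.attach(root)

    for url, (mime_type, data) in resources.items():
        maintype, subtype = mime_type.split("/", 1)
        part = MIMEBase(maintype, subtype)
        part.set_payload(data)
        encoders.encode_base64(part)      # binary data is stored as base64
        part["Content-Location"] = url
        archive.attach(part)
    return archive.as_bytes()


if __name__ == "__main__":
    mht = build_mht(
        "https://example.com/",
        "<html><body><img src='logo.png'></body></html>",
        {"https://example.com/logo.png": ("image/png", b"\x89PNG...")},
    )
    parsed = message_from_bytes(mht)
    for part in parsed.get_payload():
        print(part.get_content_type(), part["Content-Location"])
```

A viewer resolves each `src` or `href` in the page against the `Content-Location` values, which is why a single file can stand in for the whole page.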

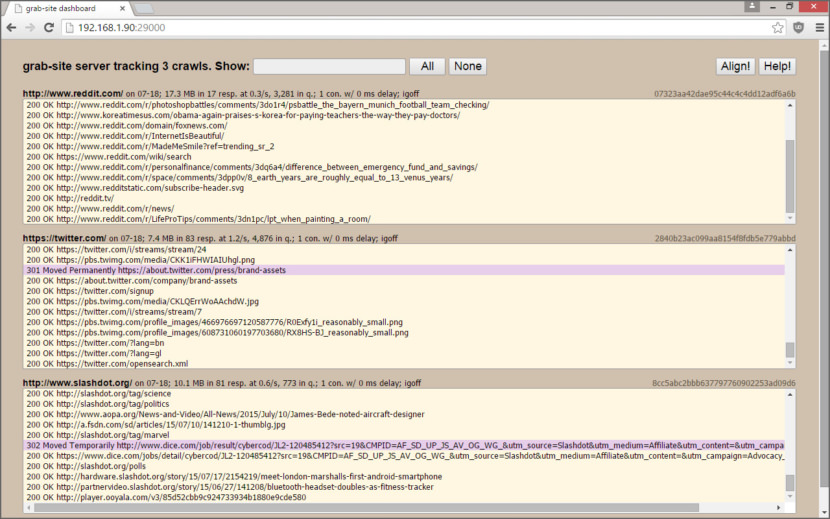

5. grab-site

grab-site is an easy, preconfigured web crawler designed for backing up websites. Give grab-site a URL and it will recursively crawl the site and write WARC files. Internally, grab-site uses a fork of wpull for crawling. It includes a dashboard for monitoring multiple crawls, and supports changing URL ignore patterns during the crawl.
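The idea of ignore patterns that can change while a crawl is running can be illustrated with a small filter object. This is only a sketch under the assumption that patterns are regular expressions matched anywhere in the URL; the `IgnoreSet` class and its methods are hypothetical and are not grab-site’s API.

```python
import re
from typing import Dict, Iterable, Pattern


class IgnoreSet:
    """A mutable set of URL ignore patterns, checked before each fetch."""

    def __init__(self, patterns: Iterable[str] = ()) -> None:
        self._compiled: Dict[str, Pattern[str]] = {}
        for pattern in patterns:
            self.add(pattern)

    def add(self, pattern: str) -> None:
        """Start ignoring URLs that match pattern (a regular expression)."""
        self._compiled[pattern] = re.compile(pattern)

    def remove(self, pattern: str) -> None:
        """Stop ignoring URLs that match pattern, if it was present."""
        self._compiled.pop(pattern, None)

    def ignores(self, url: str) -> bool:
        """Return True if any current pattern matches somewhere in url."""
        return any(rx.search(url) for rx in self._compiled.values())


if __name__ == "__main__":
    ignore = IgnoreSet([r"/calendar/"])
    print(ignore.ignores("https://example.com/calendar/2020-01"))
    # Mid-crawl, an operator notices an endless stream of reply links:
    ignore.add(r"\?replytocom=")
    print(ignore.ignores("https://example.com/post?replytocom=42"))
```

Because the crawler consults the set for every URL it is about to fetch, a pattern added halfway through takes effect on all URLs still waiting in the queue.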

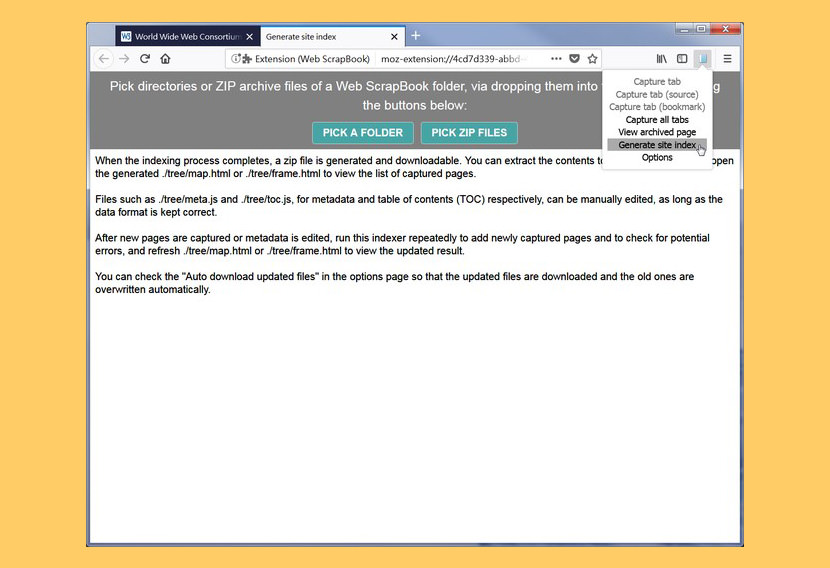

6. WebScrapBook

WebScrapBook is a browser extension that captures web pages faithfully, with various archive formats and customizable configurations. This project inherits from the legacy Firefox add-on ScrapBook X. A web page can be saved as a folder, a zip-packed archive file (HTZ or MAFF), or a single HTML file (optionally scripted as an enhancement). An archive file can be viewed by opening the index page after unzipping, using the built-in archive page viewer, or with other helper tools.
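A zip-packed page archive of this kind is easy to picture in code. The sketch below assumes the archive is an ordinary ZIP file whose entry page is named `index.html`, in line with the “opening the index page after unzipping” description above; the function names `pack_page` and `read_index` are hypothetical, and WebScrapBook’s real archives may carry extra metadata this example omits.

```python
import io
import zipfile
from typing import Mapping


def pack_page(index_html: str, resources: Mapping[str, bytes]) -> bytes:
    """Zip a page and its resources, with index.html as the entry page."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", zipfile.ZIP_DEFLATED) as archive:
        archive.writestr("index.html", index_html)
        for name, data in resources.items():
            archive.writestr(name, data)
    return buffer.getvalue()


def read_index(archive_bytes: bytes) -> str:
    """Open the archive and return the text of its index page."""
    with zipfile.ZipFile(io.BytesIO(archive_bytes)) as archive:
        return archive.read("index.html").decode("utf-8")


if __name__ == "__main__":
    packed = pack_page(
        "<html><body><img src='images/logo.png'></body></html>",
        {"images/logo.png": b"\x89PNG..."},
    )
    print(read_index(packed))
```

Since the resources sit next to `index.html` inside the archive, the page’s relative links keep working once the file is unzipped.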

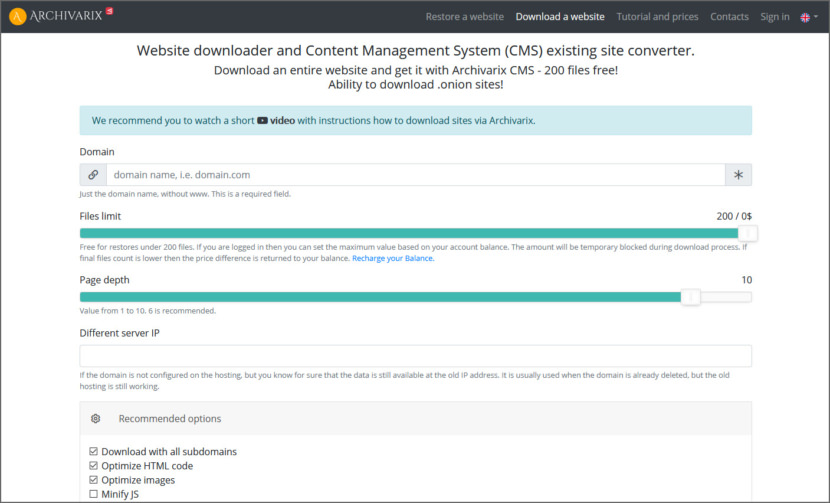

7. Archivarix

Archivarix is a website downloader and a converter of existing sites to a Content Management System (CMS). It can download an entire live website, including .onion sites. The downloader lets you fetch up to 200 files from a website for free. If the site has more files and you need all of them, you can pay for the service; the cost depends on the number of files. You can download from existing websites, the Wayback Machine, or Google Cache.

8. Website Downloader

[ Not Free Anymore ] Website Downloader, Website Copier or Website Ripper allows you to download websites from the Internet to the hard drive of your own computer. Website Downloader arranges the downloaded site according to the original website’s relative link structure. The downloaded website can be browsed by opening one of the HTML pages in a browser.

After cloning a website to your hard drive, you can open the website’s source code with a code editor or simply browse it offline using a browser of your choosing. Website Downloader can be used for several purposes. It is simple to use, and as an online service it requires no software download.

- Backups – If you have a website, you should always have a recent backup of it in case the server breaks or you get hacked. Website Downloader is the fastest and easiest way to back up your website, as it allows you to download the whole site.

- Offline Website Downloader – Download a website for future reference, so you can access it even without an internet connection, say, when you are on a flight or an island vacation!

You can also try this online downloader: Archivarix.

It’s paid.

This is a great resource! Thank you. A decade ago, I used HTTrack and it worked great, but I totally forgot about it.

Wow, thanks a bunch. I had forgotten the name because I mostly used it on my old PC. Cyotek really works the best. I first used HTTrack and it gave me nothing better than this.

Maybe consider adding A1 Website Download for Windows and Mac? It can download large websites and comes with many options, including both simple and complex “limit to” and “exclude” filters. The program is shareware with a fully functional 30-day free trial and a “free mode” for up to 500 pages.

#3 (Website Downloader) is NOT free. After downloading a website to your machine (and it doesn’t tell you where the files are), it asks you to pay.

Yes. After 30 days it only works for up to 500 pages. Regarding where A1WD places files, it is among the first options always visible when you start the software: “Scan website | Paths | Save files crawled to disk directory path”. (In addition, when viewing the downloaded results, you can see the individual path of every downloaded file in two places: the left sidebar and the top.)

Site Snatcher allows you to download websites so they’re available offline. Simply paste in a URL and click Download. Site Snatcher will download the website as well as any resources it needs to function locally. It will recursively download any linked pages up to a specified depth, or until it sees every page.

#7 Website Downloader is NOT free; it costs $20/month.

None of these will work in 2022. Most of these programs were designed and last updated in 2017. Add-ons are no longer supported by modern browsers. HTTrack supports only http and not https – useless.

I’m downloading an https site right now with WinHTTrack Website Copier 3.48-21.

I have been using WinHTTrack since forever and am still using it.